Meta-Learning for Question Answering on SQuAD 2.0

This post summarizes my final project for Stanford CS224N.

In a Question Answering (QA) system, the model learns to answer a question by understanding an associated paragraph. However, developing a QA system that performs robustly across all domains can be especially challenging, as abundant data is not always available in every domain. Therefore, one area of focus in this field has been training models to learn new tasks with limited data (e.g., Few-Shot Learning, FSL).

Meta-learning in supervised learning, in particular, is known to perform well in FSL. The idea is to teach the model to learn initial parameters that enable it to pick up a new task after seeing only a few samples of the associated data.

In this project, we explored two settings:

- MAML models trained from scratch

- MAML models trained after the baseline model was pre-trained and fine-tuned

Background

SQuAD 2.0 dataset. We used three in-domain (SQuAD, NewsQA, Natural Questions) and three out-of-domain (DuoRC, RACE, RelationExtraction) datasets. Each in-domain (IND) dataset contains 50K question-passage-answer samples, and each out-of-domain (OOD) dataset contains 127.

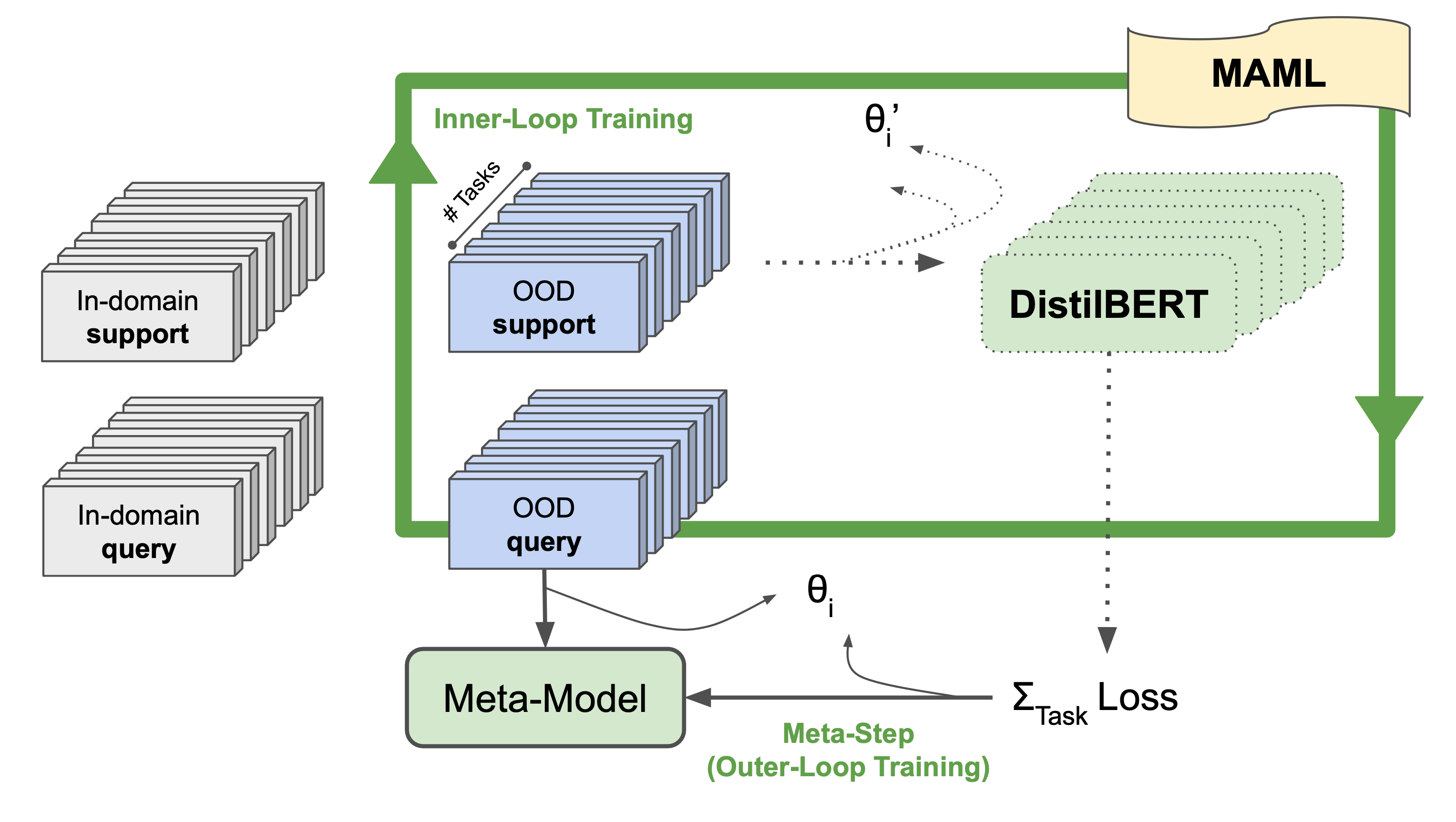

Model-Agnostic Meta-Learning (MAML). MAML was originally proposed by Finn et al. (2017).
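For reference, the two-level update at the core of MAML can be written as below. The symbols $f_{\theta}$, $p(\mathcal{T})$, $\mathcal{D}_i$, $\mathcal{D}_i'$ and $\mathcal{L}$ match the ones used in the Methods section; the step sizes $\alpha$ (inner loop) and $\beta$ (outer loop) are names assumed here for illustration.

$$
\begin{aligned}
\theta_i' &= \theta - \alpha \, \nabla_{\theta} \, \mathcal{L}_{\mathcal{T}_i}\big(f_{\theta}; \mathcal{D}_i\big) \\
\theta &\leftarrow \theta - \beta \, \nabla_{\theta} \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\big(f_{\theta_i'}; \mathcal{D}_i'\big)
\end{aligned}
$$

The first line adapts the shared parameters to one task using its support set; the second line updates the shared parameters so that the adapted models do well on the corresponding query sets.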

Methods

Fine-tuned Baseline. The baseline is a fine-tuned (FT) pre-trained transformer model, DistilBERT.

MAML DistilBERT. We adapted MAML as follows:

- We defined the baseline DistilBERT as our base learner ($f_{\theta}$).

- We implemented a method that constructs tasks rather than pre-defining a K-shot task pool ($p(\mathcal{T})$), as the K-sample support ($\mathcal{D}_i$) and query ($\mathcal{D}_i'$) sets can come from IND and OOD training datasets in different experiments.

- We used the same loss function ($\mathcal{L}$, $\textbf{loss} = - \log p_{start}(i) - \log p_{end}(j)$) as the baseline.
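To make the task construction and the loss more concrete, here is a minimal sketch in plain Python. It is not the project's code: the function names, the pool contents, and the choice of which pool feeds the support or query set are all hypothetical, and the logits are hand-written lists rather than DistilBERT outputs.

```python
import math
import random

def sample_task(support_pool, query_pool, k, rng):
    """Draw one K-shot task: K support examples and K query examples.

    The two pools may be the same dataset or different ones; which
    pool is used for which set is an assumption made per experiment.
    """
    support = rng.sample(support_pool, k)
    query = rng.sample(query_pool, k)
    return support, query

def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def span_loss(start_logits, end_logits, i, j):
    """loss = -log p_start(i) - log p_end(j) for the gold span (i, j)."""
    return -log_softmax(start_logits)[i] - log_softmax(end_logits)[j]

rng = random.Random(0)
ind_pool = [f"ind-{n}" for n in range(100)]   # placeholder example ids
ood_pool = [f"ood-{n}" for n in range(30)]    # placeholder example ids
support, query = sample_task(ind_pool, ood_pool, k=20, rng=rng)

# With uniform logits over 4 positions, each term is log(4).
uniform_loss = span_loss([0.0] * 4, [0.0] * 4, i=1, j=2)
```

Sampling tasks on demand like this avoids materializing a fixed task pool, at the cost that two tasks drawn from the same pool can share examples.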

FT Baseline + MAML DistilBERT. In addition to training MAML models from scratch, we took the FT DistilBERT (Baseline) model and trained MAML models starting from the FT checkpoint.

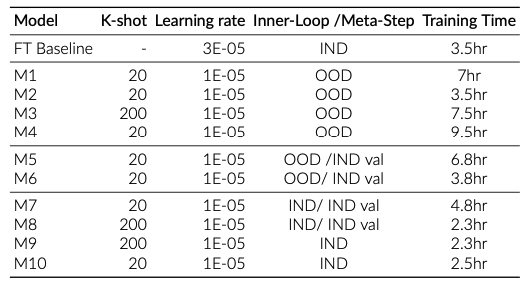
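The difference between the two starting points can be illustrated on a toy problem. The sketch below runs MAML on a scalar regression model $y = \theta x$, once from $\theta = 0$ ("from scratch") and once from a value standing in for a fine-tuned checkpoint. Everything here is an assumption made for illustration: the model, the two tasks, the step sizes, and the starting values have nothing to do with DistilBERT, and the outer update averages over tasks. Because the loss is quadratic, the exact second-order MAML gradient has a closed form.

```python
def loss(theta, data):
    """Mean squared error of the scalar model y = theta * x."""
    return sum((theta * x - y) ** 2 for x, y in data) / len(data)

def grad(theta, data):
    """First derivative of the loss with respect to theta."""
    return sum(2 * x * (theta * x - y) for x, y in data) / len(data)

def hess(data):
    """Second derivative of the loss (constant, since the loss is quadratic)."""
    return sum(2 * x * x for x, _ in data) / len(data)

def adapt(theta, support, alpha):
    """Inner loop: one gradient step on the support set."""
    return theta - alpha * grad(theta, support)

def maml_step(theta, tasks, alpha, beta):
    """Outer loop: one meta-update from the query losses of adapted models."""
    meta_grad = 0.0
    for support, query in tasks:
        adapted = adapt(theta, support, alpha)
        # Chain rule: d(adapted)/d(theta) = 1 - alpha * hess(support).
        meta_grad += grad(adapted, query) * (1.0 - alpha * hess(support))
    return theta - beta * meta_grad / len(tasks)

def post_adaptation_loss(theta, tasks, alpha):
    """Average query loss after adapting to each task's support set."""
    return sum(loss(adapt(theta, s, alpha), q) for s, q in tasks) / len(tasks)

def make_task(slope):
    """A toy task: points on the line y = slope * x."""
    support = [(1.0, slope * 1.0), (2.0, slope * 2.0)]
    query = [(0.5, slope * 0.5), (1.5, slope * 1.5)]
    return support, query

def train(theta, tasks, steps, alpha=0.1, beta=0.8):
    for _ in range(steps):
        theta = maml_step(theta, tasks, alpha, beta)
    return theta

tasks = [make_task(1.0), make_task(3.0)]
theta_scratch = train(0.0, tasks, steps=5)      # start from scratch
theta_checkpoint = train(1.8, tasks, steps=5)   # start from a "checkpoint"
```

In this toy setting both runs move toward the same meta-initialization, and the run that starts closer to it ends closer after the same number of meta-steps. Whether that carries over to a large transformer is an empirical question, which is what the experiments below look at.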

Experiments

Unless otherwise specified, the batch size for all experiments was 16. To avoid GPU out-of-memory issues, data was loaded in batches of 1 or 4 and the loss was accumulated across them; the model was updated once per effective batch of 16.
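The accumulation scheme can be sketched as follows. This is a toy illustration with a scalar model and hypothetical names, not the training loop used in the project; it only shows that summing gradients over micro-batches of 1 or 4 and normalizing by the full batch size gives the same update as processing all 16 examples at once.

```python
def grad_sum(theta, batch):
    """Sum of per-example gradients of (theta * x - y)^2 over a batch."""
    return sum(2 * x * (theta * x - y) for x, y in batch)

def accumulated_grad(theta, data, micro_batch_size):
    """Accumulate gradients over micro-batches, then average over all examples."""
    total = 0.0
    for start in range(0, len(data), micro_batch_size):
        micro = data[start:start + micro_batch_size]
        total += grad_sum(theta, micro)   # only one micro-batch is held at a time
    return total / len(data)              # normalize by the effective batch size

data = [(float(n), 2.0 * n + 1.0) for n in range(16)]   # effective batch of 16
full = accumulated_grad(0.5, data, micro_batch_size=16)
by_four = accumulated_grad(0.5, data, micro_batch_size=4)
by_one = accumulated_grad(0.5, data, micro_batch_size=1)
```

The three values agree, so the micro-batch size only affects peak memory, not the update itself.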

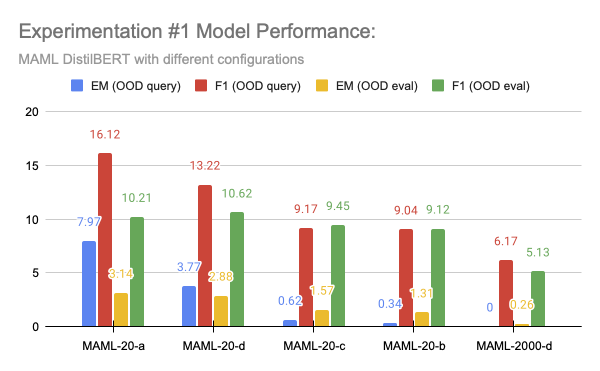

Experiment #1: MAML DistilBERT without FT Baseline

- K-shot: MAML-20-d vs. MAML-2000-d

- learning rate: MAML-20-a vs. MAML-20-b vs. MAML-20-d

- domain variability in training support: MAML-20-b vs. MAML-20-c

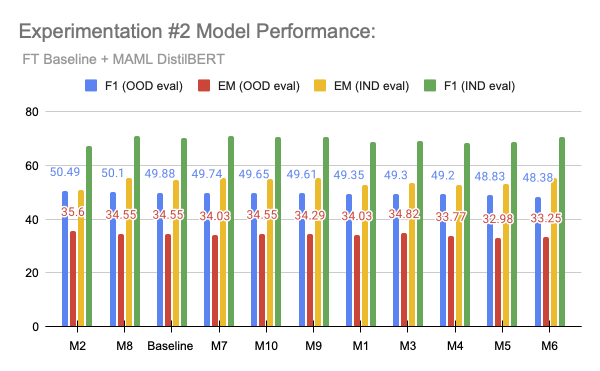

Experiment #2: Training MAML after FT Baseline

- K-shot: M1/2/4 vs. M3, M7 vs. M8, M9 vs. M10

- IND or OOD for MAML training: M1 vs. M6 vs. M7 vs. M10, M2 vs. M5 vs. M7 vs. M10

- training time: M1 vs. M2 vs. M4, M5 vs. M5

Analysis

Key-takeaway #1: MAML DistilBERT without FT Baseline couldn’t achieve the same level of model performance as the FT Baseline.

- This may be due to the large amount of IND data available during baseline model pre-training/fine-tuning.

- A larger learning rate helped the MAML model adapt faster given the same sample size, as it allowed more aggressive gradient steps at the beginning of training.

- Larger domain variability in support/query reached similar F1 performance but lower EM performance. This is intuitive: MAML was learning to learn and was exposed to many topics in a few-shot setting. Although this helps the model capture synergies across domains, the model also became more “general” and “robust”, which may explain the lower EM.
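To see how F1 can hold up while EM drops, it helps to look at how the two metrics score a single answer. The sketch below is a simplified version written in the style of the SQuAD evaluation (lowercasing, stripping punctuation and English articles, then comparing tokens); it is not the evaluation code used in this project, and the example strings are made up.

```python
import re
import string
from collections import Counter

def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def exact_match(prediction, truth):
    """1.0 only if the normalized strings are identical."""
    return float(normalize(prediction) == normalize(truth))

def f1_score(prediction, truth):
    """Token-level F1, which gives partial credit for overlapping tokens."""
    pred_tokens = normalize(prediction).split()
    true_tokens = normalize(truth).split()
    if not pred_tokens or not true_tokens:
        # Both empty (a correctly predicted "no answer") counts as a match.
        return float(pred_tokens == true_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)

# A prediction that contains the answer but with loose span boundaries.
em = exact_match("in the city of Paris", "Paris")
f1 = f1_score("in the city of Paris", "Paris")
```

Here the prediction contains the gold answer, so it earns partial F1 credit, but it gets no EM credit because the span boundaries are off. A model that finds the right region of the passage while being less precise about boundaries would show this pattern.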

Key-takeaway #2: Training MAML after FT Baseline occasionally outperformed the FT Baseline. More experimental configurations of learning rate and domain variability could be explored.

- M2, a 10-task 20-shot MAML trained on OOD samples after pre-training, outperformed the FT Baseline on the OOD validation set by 1.22% in F1 and 3.04% in EM. Its performance on the IND validation set dropped by 4.57% in F1 and 6.49% in EM. This shows that model performance on the IND datasets was sacrificed to gain additional robustness on an OOD dataset.

- M8, a 10-task 200-shot MAML trained on IND samples after pre-training, outperformed the FT Baseline on the OOD validation set by 0.44% in F1 and 0% in EM, and on the IND validation set by 0.78% in F1 and 1.10% in EM. This shows that continuing to train on the same domain datasets with MAML contributed less improvement than training with a few OOD samples.

Conclusions

MAML is worth exploring as a way to achieve cross-domain model robustness. It might not be the best framework when a large IND set and only a small OOD set are available. Training MAML after baseline model pre-training and fine-tuning occasionally performed better than the FT baseline model, likely due to the additional OOD tasks the MAML model learned from.

The full paper can be found here.

The GitHub repository for this project can be found here.