SVD (singular value decomposition)

I have recently started reviewing what I learned from the NLP & deep learning course earlier this year. While reviewing, I found the concept of SVD becoming vague again, so documented it with example codes to keep my knowledge refreshed.

SVD can be quite useful in decomposing word-document or window based co-occurence matrices (\(X\)) into word embeddings (\(U\)).

\begin{equation} \underset{m\times n}{\mathrm{X}} = \underset{m\times k}{U} \times \underset{k\times k}{S} \times \underset{k\times n}{V^T} \end{equation}

Here, we’re saying that we can make every matrix X into an orthogonal matrix (\(U_{m \times k}\)), a diagonal matrix (\(S_{k \times k}\)) and another orthogonal matrix (\(V^T_{k \times n}\)).

\[\mathrm{X}^T\mathrm{X} = (\mathrm{V}\mathrm{S}^T\mathrm{U}^T)\mathrm{U}\mathrm{S}\mathrm{V}^T = \mathrm{V}(\mathrm{S}^T\mathrm{S})\mathrm{V}^T = \mathrm{V}(\mathrm{S}^T\mathrm{S})\mathrm{V}^{-1}\] \[\mathrm{X}\mathrm{X}^T = (\mathrm{U}\mathrm{S}\mathrm{V}^T)\mathrm{V}\mathrm{S}^T\mathrm{U}^T = \mathrm{U}(\mathrm{S}^T\mathrm{S})\mathrm{U}^T = \mathrm{U}(\mathrm{S}^T\mathrm{S})\mathrm{U}^{-1}\]As the above equations hold, we can see that:

- \(\mathrm{S}^T\mathrm{S}\) is the matrix of eigenvalues (\(\sigma_{k}^2\)) on the diagonal

- \(\mathrm{V}\) is the matrix of eigenvectors for \(\mathrm{X}^T\mathrm{X}\)

- \(\mathrm{U}\) is the matrix of eigenvectors for \(\mathrm{X}\mathrm{X}^T\)

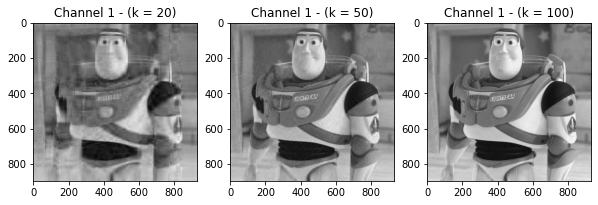

Here if we set k to be less than m, we’re trying to use SVD to reduce the dimension from the original matrix U from m to the k most important singular vectors (eigenvectors).

Taken a single channel buzz-light year image, and conduct SVD on top of it then recompose the image (\(X'\)) with the first k singular values in \(U\) and \(V^T\) and eigenvalues in \(S^TS\). We’d see that as k increased, the image became more toward the original image. While a small k value as 20 already extracted the key components of the original buzz image fairly well:

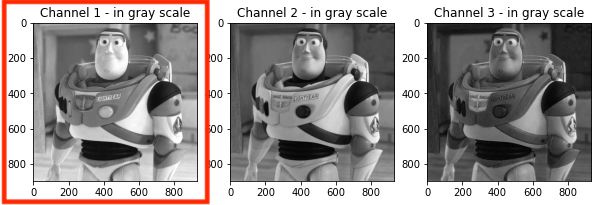

Original image in Channel 1

Decomposed images in Channel 1 with different k values

Example notebook with above example can be found here.

Formal notes from CS224N can be referenced here. I also found the MIT OpenCourse lectors by Gilbert Strang very useful: Eigenvalues and Eigenvectors, SVD.